QCIT Group team notices that recently a website

Writer: admin Time: 2023-08-28 10:43

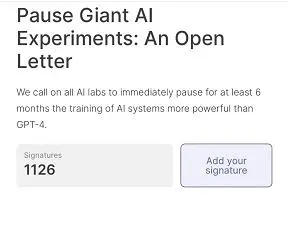

The QCIT Group team has noticed that the Future of Life Institute recently published a public joint letter on its website titled "Pause Giant AI Experiments: An Open Letter". QCIT still has some doubts about this news. At the beginning of the letter, we read: "We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4." As of this writing, the open letter has received more than 1,000 signatures.

"Suspend large-scale AI research"

In recent months, AI labs around the world have been locked in an out-of-control race to develop and deploy ever more powerful AI, even though no one, not even its creators, can understand, predict, or reliably control it.

According to the letter, artificial intelligence systems pose significant risks to society and humanity. Although AI laboratories have spent recent months racing to develop and deploy increasingly powerful digital minds, no one can currently understand, predict, or reliably control these systems, and planning and management at a level that matches the risk is not taking place. During the pause, AI laboratories and independent experts should jointly develop and implement a set of shared safety protocols for advanced AI design and development, and these protocols should be rigorously audited and overseen by independent outside experts.

Simply put, AI systems with human-competitive intelligence may pose profound risks to society and humanity, a point that top AI laboratories themselves acknowledge.

The Asilomar AI Principles, which are widely endorsed in the scientific and technological community, state that advanced artificial intelligence could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources.

It has been said that the industrial era was driven by knowledge and by competition over knowledge, while the digital era is driven by wisdom: a competition of creativity and imagination, of leadership, accountability, and responsibility, and of independent thinking.

In the past, we could only see this kind of futuristic artificial intelligence in movies, but now it is right in front of us. At the current pace of development, we do not know what capabilities AI will eventually have, so we may well come to regard it with the fear and vigilance described in the open letter.

Half a month ago, GPT-4 made a significant impact on the technology industry, so people will naturally ask: will there be a GPT-5, 6, or even 7? In fact, one team predicted some time ago that OpenAI would launch a transitional version, GPT-4.5, in September or October 2023, and that it would handle more complex text, improve coherence, and give users more accurate answers. With training on a scale of billions, GPT will only become stronger, smarter, and more professional than before.

At the end of the open letter, the authors say that humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an "AI summer" in which we reap the rewards, engineer these systems for the benefit of everyone, and give society a chance to adapt. Society has already paused other technologies that could have catastrophic effects, and we can do the same for AI. Let's enjoy a long AI summer instead of rushing unprepared into autumn.

So, will these AI teams, and the many companies behind them, "listen" to the recommendations of the open letter? QCIT Group also wonders whether this open letter was itself written by GPT or another AI. Either way, we must think about our future for ourselves, since AI will be thinking about its own future too...